Creating a Glean index for React

See part 1 (creating a working aarch64 env on Mac) and part 2 (building Glean for ARM).

In the last post we got a working Glean installation built on aarch64 with native emulation on the ARM-based M1 MacBook Air. To be useful, we need to “index” some code and store the code facts in Glean. See (What is Glean?).

Indexing is the process of analysing source code and logging the things we find. Indexers look a lot like parsers or compiler front-ends: they take source code and build up semantic information, then record it to files for ingestion into Glean, or write directly to the database.

Almost all linters, static analysis tools, compilers, transpilers and formatters parse the code, analyse it and write out transformed results, usually throwing away what they learned in the process. What Glean lets us do is persist the semantic information the compiler discovered, in a way that can be queried very efficiently. We turn compilers into data sources for a distributed cache.

This splits up the usual compiler frontend <-> backend coupling, with Glean as a cache between the two phases. This lets us scale: we can index a repo frequently, and share the results with thousands of engineers, or support millions of concurrent queries to a Glean database, with very low latency. It’s like the compiler AST and type environment are now in memcache, and our IDEs and code analysis tools can hit the cache instead of painfully reconstructing the frontend themselves.

What makes a good index?

What we index is described by a Glean schema written in Angle. The schema describes the types, predicates (tables) and references between facts, as well as how they are represented. It’s broadly “pay as you go”: you don’t need to capture everything about the language, just what you need for specific tasks. There are schemas for most common languages, as well as many mini-languages (like GraphQL, Thrift, Buck). (But n.b. there aren’t _indexers_ for most languages, just schemas. The indexers are quite a bit more work as they usually hook into custom compiler toolchains.)

Common examples of things we would capture are:

- file names

- declarations, definition locations

- uses of definitions (“xrefs”)

- language elements: module names, type names, functions, methods, classes, ..

That’s usually enough to get a working navigation service up (e.g. jump-to-definition or find-references). For more sophisticated static analysis you will need to capture more of the compiler environment. It’s a good idea to float out strings and values that are repeated a lot into their own predicates, so as to maximise sharing. It also helps to have a sense of the queries you will need to write when constructing the index schema.
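To make the sharing point concrete, here is a toy sketch in Python. It is not Glean’s actual storage, just an illustration, and the predicate names `Name` and `Reference` are made up for the example. A repeated string is stored once as its own fact, and every other fact refers to it by id:

```python
class FactStore:
    """Toy model of fact de-duplication; NOT Glean's real storage layer."""

    def __init__(self):
        self._ids = {}  # (predicate, key) -> fact id

    def add(self, predicate, key):
        """Return the id for this fact, allocating one only on first sight."""
        return self._ids.setdefault((predicate, key), len(self._ids) + 1)

    def __len__(self):
        return len(self._ids)


store = FactStore()
for use_site in range(3):
    # The string is "floated out" into its own (hypothetical) Name predicate,
    # so it is stored once no matter how many facts mention it.
    name_id = store.add("Name", "useState")
    # Each (hypothetical) Reference fact only carries the small id.
    store.add("Reference", (name_id, use_site))

print(len(store))  # 4 facts: 1 Name + 3 References
```

If the name were inlined into each reference instead, the string would be repeated in every one of those facts.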

Once you have a schema, you can store data in that format. Indexers are standalone programs, often with no dependency on Glean itself, that parse code (and add other type information, resolve names, resolve packages), before writing out lines and lines of JSON in the schema format you specified (or writing directly to the Glean db over binary thrift).

Ok, let’s index some JavaScript

Let’s see if we can index the React codebase. React is written in JavaScript, and uses the Flow type system. Flow knows about Glean, and can be run directly as an indexer. My aim here is to use the aarch64 VM as the glean server, but the indexer can run anywhere we want — on any box. We just have to write the data into Glean on the VM. Let’s have a go at installing Flow on aarch64/Debian though, for fun, as arm64/Linux is supported by Flow.

We can build from source (needs opam/OCaml) or install a pre-built binary from https://github.com/facebook/flow/releases . I installed the binary into my aarch64 VM and we are in business:

$ flow/flow --version

Flow, a static type checker for JavaScript, version 0.169.0

We’ll need the React source, so get that. This will be our source code to index:

$ git clone https://github.com/facebook/react.git

Initialize flow and process the source using flow glean:

$ flow glean packages --output-dir=/tmp/flow-out/ --write-root="react"

>>> Launching report...

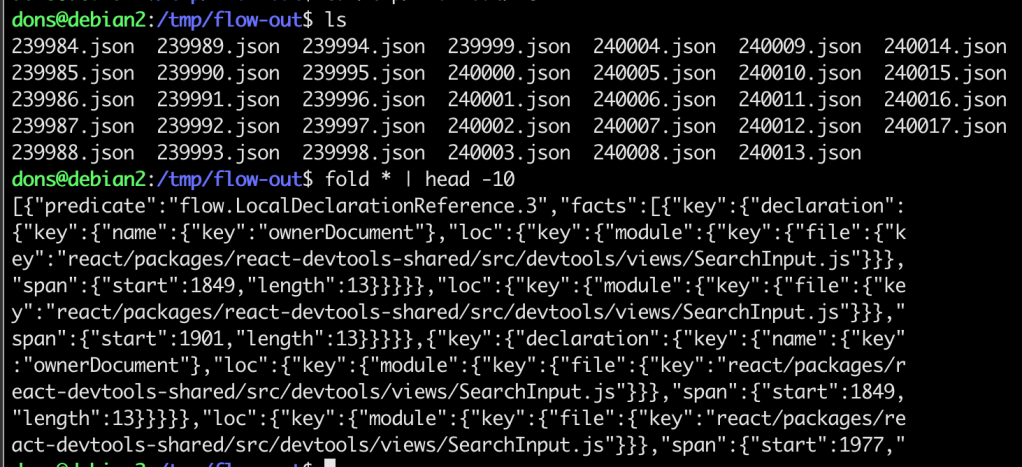

Wrote facts about 787 JavaScript files.

And we are in business! What did we index? Have a look in /tmp/flow-out at the raw JSON. The index is in textual JSON format: each file is just an array of predicate + facts pairs. Each predicate has a set of facts associated (and facts are unique in Glean, so any duplicates will be de-duped when ingested).
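If you would rather not eyeball the raw files, a few lines of Python can summarise them. This is a minimal sketch that assumes each output file is a JSON array of objects with `predicate` and `facts` fields, as in the excerpt further down. `count_facts` is my own helper name, not part of Flow or Glean:

```python
import json
from collections import Counter
from pathlib import Path


def count_facts(output_dir):
    """Return a Counter mapping predicate name -> number of facts written."""
    counts = Counter()
    for path in sorted(Path(output_dir).glob("*.json")):
        with path.open(encoding="utf-8") as handle:
            batches = json.load(handle)
        # Assumed layout: [{"predicate": "...", "facts": [...]}, ...]
        for batch in batches:
            counts[batch["predicate"]] += len(batch["facts"])
    return counts
```

Calling `count_facts("/tmp/flow-out")` and printing the result gives a per-predicate tally before anything is loaded into Glean. Predicates that were written with an empty fact list show up with a count of zero.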

The whole React index is about 58M of JSON, while the raw source code was 17M. We have a few predicates defined with facts:

$ sed 's/"predicate":"\([^"]*\)",/\n\1\n/g' * | grep '^flow.' | sort | uniq

flow.DeclarationInfo.3

flow.FileOfStringModule.3

flow.ImportDeclaration.3

flow.LocalDeclarationReference.3

flow.MemberDeclarationInfo.3

flow.MemberDeclarationReference.3

flow.SourceOfExport.3

flow.SourceOfTypeExport.3

flow.TypeDeclarationInfo.3

flow.TypeDeclarationReference.3

flow.TypeImportDeclaration.3

The definitions of these predicates are in flow.angle, which defines all the possible predicates we might have facts for in Flow/JavaScript. Looking at the first entry in the first file:

[{"predicate":"flow.LocalDeclarationReference.3"

,"facts":[{"key":{"declaration":{"key":{"name":{"key":"ownerDocument"},"loc":{"key":{"module":{"key":{"file":{"key":"react/packages/react-devtools-shared/src/devtools/views/SearchInput.js"}}},"span":{"start":1849,"length":13}}}}},"loc":{"key":{"module":{"key":{"file":{"key":"react/packages/react-devtools-shared/src/devtools/views/SearchInput.js"}}},"span":{"start":1901,"length":13}}}}}

,{"key":{"declaration":{"key":{"name":{"key":"ownerDocument"},"loc":{"key":{"module":{"key":{"file":{"key":"react/packages/react-devtools-shared/src/devtools/views/SearchInput.js"}}},"span":{"start":1849,"length":13}}}}},"loc":{"key":{"module":{"key":{"file":{"key":"react/packages/react-devtools-sha..

We can parse this fact as:

There is a “LocalDeclarationReference” of “ownerDocument” in react/packages/react-devtools-shared/src/devtools/views/SearchInput.js, declared at byte offset 1849 with length 13, which has a reference at offset 1901.
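The same reading can be done mechanically. A small sketch, assuming the nesting shown in the JSON above (`describe_local_reference` and `make_range` are hypothetical helper names of mine, and the fact is rebuilt by hand from the first entry):

```python
FILE = "react/packages/react-devtools-shared/src/devtools/views/SearchInput.js"


def make_range(start, length):
    """Rebuild the nested 'loc' value as it appears in the indexer output."""
    return {"key": {"module": {"key": {"file": {"key": FILE}}},
                    "span": {"start": start, "length": length}}}


# The first LocalDeclarationReference fact from the excerpt above.
fact = {"key": {"declaration": {"key": {"name": {"key": "ownerDocument"},
                                        "loc": make_range(1849, 13)}},
                "loc": make_range(1901, 13)}}


def describe_local_reference(fact):
    """Flatten one LocalDeclarationReference fact into a small dict."""
    key = fact["key"]
    decl = key["declaration"]["key"]
    decl_loc = decl["loc"]["key"]
    use_span = key["loc"]["key"]["span"]
    return {
        "name": decl["name"]["key"],
        "file": decl_loc["module"]["key"]["file"]["key"],
        "declared_at": (decl_loc["span"]["start"], decl_loc["span"]["length"]),
        "used_at": (use_span["start"], use_span["length"]),
    }


print(describe_local_reference(fact))
```

Every level of the schema adds a `key` wrapper, which is why the paths look so repetitive.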

Seems plausible. The structure of the schema tells us the shape of the JSON we need to generate. E.g. LocalDeclarationReferences are bytespans associated with the use of a Declaration, represented in Angle as:

predicate Range: {

module : Module,

span: src.ByteSpan,

}

predicate Name: string

predicate Declaration: {

name: Name,

loc: Range,

}

# connects a variable to its declaration within the same file

predicate LocalDeclarationReference: {

declaration: Declaration,

loc: Range,

}

Write the data to Glean

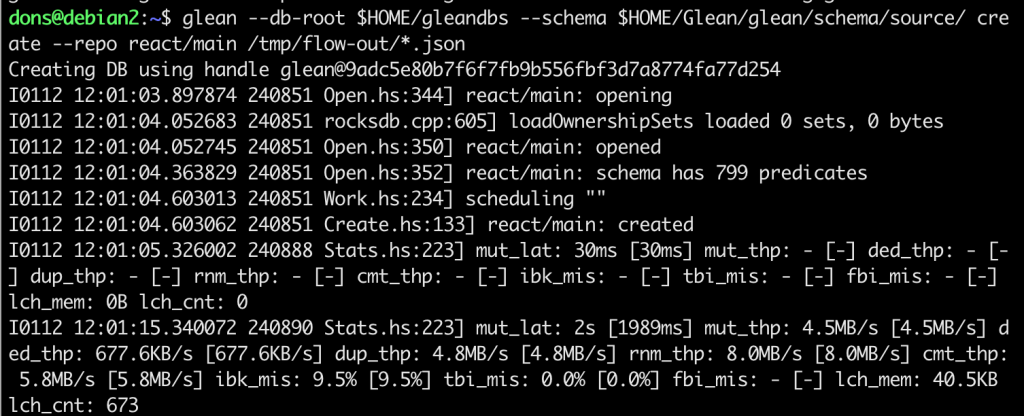

Now let’s ingest that into Glean to query. I’ll make a directory in $HOME/gleandbs to store the Glean db images. We can install the standard schema, or just point Glean at the schema source directory. Now load all that JSON in. You can do this in parallel on multiple cores to speed things up (e.g. with +RTS -N8 -A32m -RTS) if it is a very, very big DB, but this one is fine to run single threaded.

Our first db will be called “react” with tag “main”, but in a production setting you would probably use the commit hash as the tag to identify the data set. Glean data is immutable once the DB is finished, so it’s fine to use the commit hash if it is also immutable.
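If you do tag by commit hash, the repo name can be assembled by a small script. A sketch, assuming `git` is on the path and using only the `glean` flags that appear in this post (`--db-root`, `--schema`, `create --repo`). The helper names are mine:

```python
import subprocess


def current_commit(checkout_dir):
    """Ask git for the commit hash of a checkout (assumes git is installed)."""
    out = subprocess.check_output(
        ["git", "-C", checkout_dir, "rev-parse", "HEAD"], text=True
    )
    return out.strip()


def glean_create_command(repo_name, tag, json_files, db_root, schema_dir):
    """Assemble the argv for `glean create`; nothing is executed here."""
    return [
        "glean",
        "--db-root", db_root,
        "--schema", schema_dir,
        "create",
        "--repo", f"{repo_name}/{tag}",
        *json_files,
    ]
```

Something like `glean_create_command("react", current_commit("react"), files, db_root, schema_dir)` would then give a list you can hand to `subprocess.run(..., check=True)`. For this post I just use “main” and type the command by hand.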

$ mkdir ~/gleandbs

$ glean --db-root $HOME/gleandbs --schema $HOME/Glean/glean/schema/source/ create --repo react/main /tmp/flow-out/*.json

And the Glean RTS does some work:

We can look at the DB in the glean shell:

$ glean shell --db-root $HOME/gleandbs --schema $HOME/Glean/glean/schema/source/

Glean Shell, built on 2022-01-08 07:22:56.472585205 UTC, from rev 9adc5e80b7f6f7fb9b556fbf3d7a8774fa77d254

type :help for help.

> :list

react/main (incomplete)

Created: 2022-01-12 02:01:04 UTC (3 minutes ago)

> :pager on

> :db react/main

> :stat

Use :stat to see a summary of the data we have stored. It’s already a basic form of code analysis:

flow.Declaration.3

count: 33527

size: 1111222 (1.06 MB) 7.1861%

flow.DeclarationInfo.3

count: 33610

size: 1177304 (1.12 MB) 7.6135%

flow.Documentation.3

count: 2215

size: 61615 (60.17 kB) 0.3985%

flow.Export.3

count: 1803

size: 53050 (51.81 kB) 0.3431%

flow.ImportDeclaration.3

count: 5504

size: 196000 (191.41 kB) 1.2675%

flow.LocalDeclarationReference.3

count: 86297

size: 2922952 (2.79 MB) 18.9024%

So this is just basic info, but the React project has 33k unique declarations, 5,500 import declarations, 86k local variable uses, 3,600 type declarations, 1,117 modules, and 904 files. You can use these summaries over time to understand code complexity growth. Things may be missing here: it’s up to the indexer owner to run the indexer and capture all the things that need capturing. Glean is just reporting what was actually found.
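To track these numbers over time you need them in a machine-readable form. Here is a rough Python sketch that parses text laid out like the :stat output above. I’m assuming the format is exactly a predicate name followed by `count:` and `size:` lines, and `parse_stat` is a made-up helper, not a Glean API:

```python
import re

STAT_LINE = re.compile(r"^(count|size):\s+(\d+)")

SAMPLE = """
flow.Declaration.3
count: 33527
size: 1111222 (1.06 MB) 7.1861%
flow.ImportDeclaration.3
count: 5504
size: 196000 (191.41 kB) 1.2675%
"""


def parse_stat(text):
    """Map predicate name -> {'count': int, 'size': int (bytes)}."""
    stats = {}
    current = None
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue  # the shell output is double-spaced in places
        match = STAT_LINE.match(line)
        if match is None:
            # Anything that is not a count/size line starts a new predicate.
            current = line
            stats[current] = {}
        elif current is not None:
            stats[current][match.group(1)] = int(match.group(2))
    return stats


print(parse_stat(SAMPLE)["flow.Declaration.3"]["count"])
```

Saving one such dictionary per indexed commit and diffing the counts would give a crude growth chart.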

The DB is in “incomplete” state, meaning we could write more data to it (e.g. if the indexer failed part way through we could restart it and resume safely, or we could shard analysis of very large projects). But before we “finish” the DB to freeze it, we need to derive additional predicates.

Note there are some limitations here: the Glean index needs to know about the JavaScript and Flow module system (in particular, the mapping from modules to their string names, and from module string names to file paths), so that imports like ‘react’ resolve to the correct module file path.

import {useDebugValue, useEffect, useState} from 'react';

However, if we look closely at our default Flow index, the string to file facts are all empty. This will limit our ability to see through file names imported via string names (e.g. “React.js” gets imported as ‘react’).

"predicate":"flow.FileOfStringModuleExport.3","facts":[]}

which I think means I haven’t configured Flow correctly or set up the module maps properly (halp Flow folks?).

Derived predicates in Glean

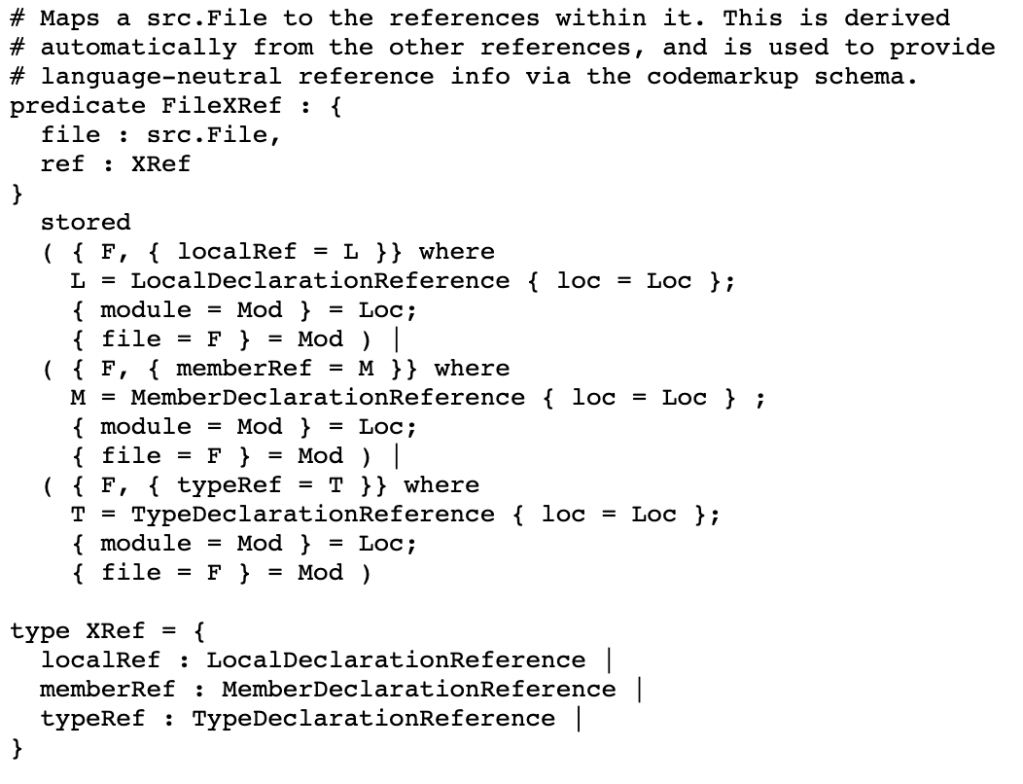

A bit like stored procedures, we can write Angle predicates that are defined in terms of other, existing predicates. This is how we do abstraction. It’s morally equivalent to defining SQL tables on the fly in terms of other tables, with some different guarantees as Glean is more like datalog than a relational system. Derived predicates can be computed on the fly, or fully generated and stored. A very common use case is to compute inverse indices (e.g. find-references is the inverse of jump-to-definition). We can index all uses of definitions, then compute the inverse by deriving.
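The inversion idea itself is simple. In plain Python (this is only an illustration of the concept, not how Glean derives facts, and the sample pairs are invented for the example):

```python
from collections import defaultdict


def invert_xrefs(xrefs):
    """Turn (file, target) reference pairs into target -> sorted list of files."""
    uses = defaultdict(set)
    for file, target in xrefs:
        uses[target].add(file)
    return {target: sorted(files) for target, files in uses.items()}


# Invented "jump-to-definition" data: which file refers to which name.
pairs = [
    ("a.js", "useState"),
    ("b.js", "useState"),
    ("a.js", "useEffect"),
]

# The inverse answers "find-references": which files use a given name.
print(invert_xrefs(pairs)["useState"])  # ['a.js', 'b.js']
```

Deriving and storing the inverse up front trades some DB size for not having to scan every reference at query time.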

An example is the “FileXRef” predicate in Flow, which builds an index of File name facts to cross-references in those files. You would do this to quickly discover all outbound references from a file.

This is a stored predicate. The indexer doesn’t write facts of this sort — they are defined in terms of other facts: LocalDeclarationReferences etc. To populate this index we need to derive it first. Let’s do that:

$ glean --db-root $HOME/gleandbs --schema $HOME/Glean/glean/schema/source/ derive --repo react/main flow.FileXRef

I0112 12:20:10.484172 241107 Open.hs:344] react/main: opening

I0112 12:20:10.526576 241107 rocksdb.cpp:605] loadOwnershipSets loaded 0 sets, 0 bytes

I0112 12:20:10.526618 241107 Open.hs:350] react/main: opened

I0112 12:20:10.749966 241107 Open.hs:352] react/main: schema has 799 predicates

flow.FileXRef : 0 facts

I0112 12:20:11.119634 241107 Stats.hs:223] mut_lat: 59ms [59ms] mut_thp: - [-] ded_thp: - [-] dup_thp: - [-] rnm_thp: - [-] cmt_thp: - [-] ibk_mis: - [-] tbi_mis: - [-] fbi_mis: - [-] lch_mem: 0B lch_cnt: 0

I0112 12:20:11.547000 241108 rocksdb.cpp:605] loadOwnershipSets loaded 0 sets, 0 bytes

flow.FileXRef : 112662 facts

We generated 112,662 facts about cross-references. Taking a peek at the DB now with :stat:

flow.FileXRef.3

count: 112662

size: 4043028 (3.86 MB) 20.7266%

We’ve increased the DB size by 3.8M. We can derive the rest of the stored predicates now and finalize the DB. Note we have to derive in dependency order, as some stored predicates depend on the results of others. I just do this in two phases:

$ glean --db-root $HOME/gleandbs --schema $HOME/Glean/glean/schema/source/ derive --repo react/main flow.NameLowerCase flow.FileDeclaration flow.FileXRef flow.FlowEntityImportUses flow.FlowTypeEntityImportUses

I0112 12:43:12.098162 241911 Open.hs:344] react/main: opening

I0112 12:43:12.141024 241911 rocksdb.cpp:605] loadOwnershipSets loaded 0 sets, 0 bytes

I0112 12:43:12.141064 241911 Open.hs:350] react/main: opened

I0112 12:43:12.322456 241911 Open.hs:352] react/main: schema has 799 predicates

I0112 12:43:12.367130 242084 Stats.hs:223] mut_lat: 112us [112us] mut_thp: - [-] ded_thp: - [-] dup_thp: - [-] rnm_thp: - [-] cmt_thp: - [-] ibk_mis: - [-] tbi_mis: - [-] fbi_mis: - [-] lch_mem: 0B lch_cnt: 0

flow.FileDeclaration : 46594 facts

flow.FileXRef : 112662 facts

flow.FlowEntityImportUses : 3022 facts

flow.NameLowerCase : 9621 facts

flow.FlowTypeEntityImportUses : 692 facts
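If you have many stored predicates, the ordering can be worked out rather than guessed. A sketch using Python’s `graphlib` (Python 3.9+). Note the dependency edges below are invented for illustration; the real ones have to be read from the predicate definitions in flow.angle:

```python
from graphlib import TopologicalSorter

# HYPOTHETICAL edges: predicate -> set of predicates it is assumed to need.
# These are NOT taken from flow.angle; they only demonstrate the technique.
HYPOTHETICAL_DEPS = {
    "flow.NameLowerCase": set(),
    "flow.FileDeclaration": set(),
    "flow.FileXRef": set(),
    "flow.FlowEntityImportUses": {"flow.FileXRef"},
    "flow.FlowTypeEntityImportUses": {"flow.FileXRef"},
}


def derivation_phases(depends_on):
    """Group predicates into phases; each phase only needs earlier phases."""
    sorter = TopologicalSorter(depends_on)
    sorter.prepare()  # raises graphlib.CycleError if the graph has a cycle
    phases = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())
        phases.append(ready)
        sorter.done(*ready)
    return phases


for number, phase in enumerate(derivation_phases(HYPOTHETICAL_DEPS), start=1):
    print(number, " ".join(phase))
```

Each printed phase could then become one `glean derive` invocation, run in order.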

And freeze the data.

$ glean --db-root $HOME/gleandbs --schema $HOME/Glean/glean/schema/source finish --repo react/main

I0112 12:45:54.415550 242274 Open.hs:344] react/main: opening

I0112 12:45:54.451892 242274 Open.hs:350] react/main: opened

I0112 12:45:54.671070 242274 Open.hs:352] react/main: schema has 799 predicates

I0112 12:45:54.701830 242270 Work.hs:506] workFinished Work {work_repo = Repo {repo_name = "react", repo_hash = "main"}, work_task = "", work_parcelIndex = 0, work_parcelCount = 0, work_handle = "glean@9adc5e80b7f6f7fb9b556fbf3d7a8774fa77d254"}

I0112 12:45:54.707198 242274 Backup.hs:334] thinned schema for react/main contains src.1, flow.3

I0112 12:45:54.707224 242274 Open.hs:287] updating schema for: react/main

I0112 12:45:54.824131 242274 Open.hs:299] done updating schema for open DBs

I0112 12:45:54.824172 242274 Backup.hs:299] react/main: finalize: finished

The db is now frozen and cannot be changed.

> :list

react/main (complete)

Created: 2022-01-12 02:01:04 UTC (45 minutes ago)

Completed: 2022-01-12 02:45:55 UTC (51 seconds ago)

Poking around a Glean database

We can look at this data by querying in the Glean shell. E.g. to count all xrefs in ReactHooks.js:

react> :limit 0

react> :count flow.FileXRef { file = "react/packages/react/src/ReactHooks.js" }

134 results, 605 facts, 2.84ms, 310224 bytes, 1032 compiled bytes

To see, say, only local references, and just the names of the definitions they point at:

react> N where flow.FileXRef { file = "react/packages/react/src/ReactHooks.js", ref = { localRef = { declaration = { name = N } } } }

{ "id": 14052, "key": "deps" }

{ "id": 4327, "key": "callback" }

{ "id": 13980, "key": "dispatcher" }

{ "id": 9459, "key": "create" }

{ "id": 5957, "key": "ReactCurrentDispatcher" }

{ "id": 1353, "key": "getServerSnapshot" }

{ "id": 1266, "key": "getSnapshot" }

{ "id": 1279, "key": "subscribe" }

{ "id": 14130, "key": "initialValue" }

{ "id": 3073, "key": "init" }

{ "id": 5465, "key": "source" }

...

Or we could query for types used in the file:

react> N where flow.FileXRef { file = "react/packages/react/src/ReactHooks.js", ref = { typeRef = { typeDeclaration = { name = N } } } }

{ "id": 1416, "key": "T" }

{ "id": 3728, "key": "A" }

{ "id": 14493, "key": "Dispatch" }

{ "id": 14498, "key": "S" }

{ "id": 14505, "key": "I" }

{ "id": 14522, "key": "BasicStateAction" }

{ "id": 3318, "key": "ReactContext" }

{ "id": 2363, "key": "Snapshot" }

{ "id": 14551, "key": "AbortSignal" }

{ "id": 6196, "key": "Source" }

{ "id": 8059, "key": "Dispatcher" }

{ "id": 7362, "key": "MutableSourceSubscribeFn" }

{ "id": 7357, "key": "MutableSourceGetSnapshotFn" }

{ "id": 7369, "key": "MutableSource" }

Ok this is starting to get useful.

We’re doing some basic code analysis on the fly in the shell. But I had to know / explore the flow schema to make these queries. That doesn’t really scale if we have a client that needs to look at multiple languages — we can’t reasonably expect the client to know how declarations and definitions etc are defined in every single language. Luckily, Glean defines abstractions for us in code.angle and codemarkup.angle to generically query for common code structures.

Querying generically

Entities are an Angle abstraction for “things that have definitions” in programming languages — like types, modules, classes etc. There are some common queries we need across any language:

- files to their cross-references, of any entity sort

- references to definitions

- definitions in this file

- entity to its definition location and file

For these common operations, a language-agnostic layer is defined in codemarkup.angle, taking care of all the subtleties of resolving imports, headers and so on for each language. E.g. for find-references, there’s a derived “EntityUses” predicate for a bunch of languages here: https://github.com/facebookincubator/Glean/blob/main/glean/schema/source/codemarkup.angle#L259

We can use this to query Flow too. E.g. how many known entities are defined or declared in ReactHooks.js? 99.

react> :count codemarkup.FileEntityLocations { file = "react/packages/react/src/ReactHooks.js" }

99 results, 354 facts, 13.15ms, 9297888 bytes, 54232 compiled bytes

And how many uses (xrefs) are in that file? 132.

react> :count codemarkup.FileEntityXRefLocations { file = "react/packages/react/src/ReactHooks.js" }

132 results, 329 facts, 40.44ms, 27210432 bytes, 160552 compiled bytes

Quick and dirty find-references for JavaScript

So we probably have enough now to do some basic semantic code search, i.e. not just textual search like grep, but semantically precise search as the compiler would see it. Let’s pick an entity and find its references. Since React is basically purely functional programming for UIs, let’s look for how often state is used: find-references to useState.

First, we get the entity. This tells us the definition site. The Glean key of the entity is $575875, and its structure is as below. Note the compound query here (the semicolon), where I name the entity ‘E’, then filter on its body for only those ‘E’s with the name “useState”:

react> E where codemarkup.FileEntityLocations { file = "react/packages/react/src/ReactHooks.js", entity = E } ; { flow = { decl = { localDecl = { name = "useState" } } } } = E

{

"id": 575875,

"key": {

"flow": {

"decl": {

"localDecl": {

"id": 14269,

"key": {

"name": { "id": 1317, "key": "useState" },

"loc": {

"id": 14268,

"key": {

"module": {

"id": 12232,

"key": {

"file": { "id": 12231, "key": "react/packages/react/src/ReactHooks.js" }

}

},

"span": { "start": 2841, "length": 8 }

Now to find direct references to this elsewhere in React, we add codemarkup.EntityUses { target = E, file = F } to the query and return the files F:

react> F where codemarkup.FileEntityLocations { file = "react/packages/react/src/ReactHooks.js", entity = E } ; { flow = { decl = { localDecl = { name = "useState" } } } } = E ; codemarkup.EntityUses { target = E, file = F }

{ "id": 10971, "key": "react/packages/react/src/React.js" }

1 results, 1 facts, 9.19ms, 5460072 bytes, 8140 compiled bytes

So that finds the first-order direct reference to useState from ReactHooks.js to React.js. To find the actual uses in the rest of the react package, we need a proper index for module names to strings, so that an import of ‘react’ can be resolved to ‘React.js’ and thus to the origin. Glean knows about this, but my indexer doesn’t have StringToModule facts; I need the flow indexer to generate these somehow.
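Since the next step is a client, it is worth noting that the find-references query above is just text, so a client can build it from a file path and a name. A minimal sketch (the function name is mine; it does no escaping, so it simply rejects inputs containing quotes or backslashes):

```python
def find_references_query(file, name):
    """Build the Angle query text used above for a local Flow declaration."""
    for value in (file, name):
        if '"' in value or "\\" in value:
            raise ValueError(f"cannot safely embed {value!r} in a query")
    return (
        f'F where codemarkup.FileEntityLocations {{ file = "{file}", entity = E }} ; '
        f'{{ flow = {{ decl = {{ localDecl = {{ name = "{name}" }} }} }} }} = E ; '
        "codemarkup.EntityUses { target = E, file = F }"
    )


print(find_references_query("react/packages/react/src/ReactHooks.js", "useState"))
```

This only covers the `localDecl` shape I queried by hand. Other entity kinds would need their own patterns.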

For now, this is enough. We are alive.

In the next part I’ll look at writing a simple code search client to the Glean server running on the VM.